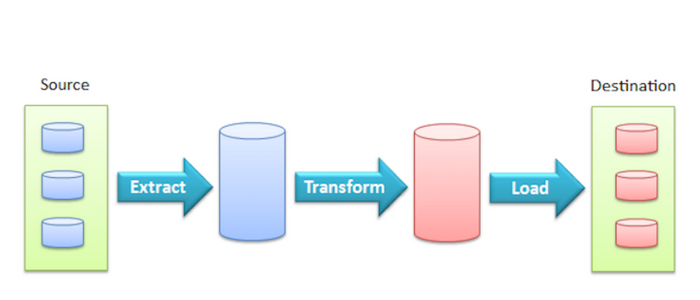

Writing endless data transformations wasn't sustainable for an engineering team handling hundreds of inputs. Here's how Clover Health enabled their business users to help.

It's rare to find an ETL system that's completely static. As organizations change and grow, they develop new business requirements. Their data pipelines must change and adapt in response, ultimately becoming more robust and full-featured. Yet constant development can make already brittle ETL systems seem even more fragile.

Furthermore, systems with large numbers of different types of inputs bring special challenges: building, testing, and managing a rapidly growing number of data transformations can become a daunting project for the engineering team.

The Clover Health ETL system supports hundreds of inputs and more than 500 custom transformations in production, as well as a large number of custom connections between their different ETL pipelines. On hearing about the magnitude of the system, one might rightfully wonder, "How does Clover guarantee and maintain data quality across so many different inputs and transforms?"

Exploring the development trajectory of Clover's system makes for a fascinating story, and their data team's successes and pitfalls offer illustrative lessons for other engineers seeking to make their own ETL systems more robust.
