Data Council Blog

How Dremio Uses Apache Arrow to Increase Performance

(Image source: http://arrow.apache.org/)

What if all the best open-source data platforms could easily share (ahem) data with each other?

As data has proliferated and open-source software (OSS) has continued to dominate both the stacks and the business models of the top tech companies in the world, the number of different data platforms and tools has grown at an accelerating pace.

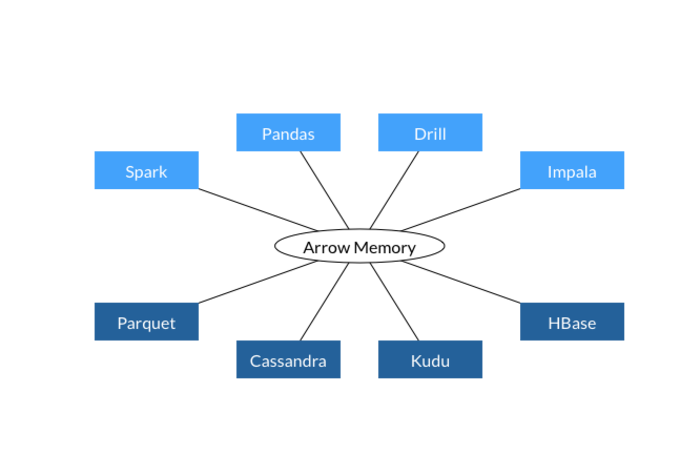

Having a hard time keeping up with the differences between Kudu, Parquet, Cassandra, HBase, Spark, Drill and Impala? You're not alone, and that is one of the reasons we bring top OSS contributors to these platforms together to share at DataEngConf.

But there's one new innovation that attempts to bind all the above projects together by enabling them to share a common memory format. It's a new top-level Apache project called Arrow that aims to dramatically decrease the amount of computation wasted on serializing and deserializing in-memory objects. That serialization step is common in analytics applications that move data between systems, each of which has its own internal memory representation.
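To make the cost concrete, here is a minimal sketch that uses only the Python standard library. It does not use Arrow's API; the `array` buffer simply stands in for an agreed columnar memory layout, and the two `exchange_*` functions are hypothetical names for illustration. One path encodes and re-parses every value, while the other lets the consumer view the producer's buffer directly.

```python
import json
from array import array

# Hypothetical "producer" system: a column of 64-bit integers held in one
# contiguous, typed buffer (a stand-in for a shared columnar format).
column = array("q", range(100_000))

def exchange_via_serialization(col):
    """Producer encodes to text; consumer parses it back into its own objects."""
    wire = json.dumps(col.tolist())  # serialize: walk and encode every value
    return json.loads(wire)          # deserialize: rebuild every value

def exchange_via_shared_format(col):
    """Both sides agree on the buffer layout, so the consumer just views it."""
    return memoryview(col)           # no per-value work and no copy

copied = exchange_via_serialization(column)
shared = exchange_via_shared_format(column)

# Both consumers see the same values.
assert copied[10] == shared[10] == 10

# The view aliases the producer's memory, so a later write is visible through
# it, while the deserialized copy is a separate object that stays unchanged.
column[10] = -1
assert shared[10] == -1 and copied[10] == 10
```

The serialized path does work proportional to the number of values on both sides of the exchange. The shared-layout path does none, which is the kind of saving a common memory format is aiming for.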

Watch the video below for a very quick intro to Arrow where Wes McKinney (Apache Arrow PMC) explains at DataEngConf NYC '16 how Pandas is making use of Arrow to speed up working with large datasets.

And I'm happy to announce that at this year's DataEngConf NYC, we're featuring a newly launched Bay Area company, Dremio, which has become one of the early success stories of Apache Arrow by integrating it into its production platform.

Meet Jacques Nadeau of Dremio

Jacques Nadeau is co-founder and CTO of Dremio. He is also the PMC Chair of the Apache Arrow project, spearheading the project’s technology and community. Previously he was MapR’s lead architect for distributed systems technologies. He was also co-founder and CTO of YapMap. Prior to YapMap, he was director of new product engineering at Quigo (acquired by AOL in 2007). He is an industry veteran with more than 15 years of big data and analytics experience.

In his talk, "Using Apache Arrow, Calcite and Parquet to build a Relational Cache," Jacques will discuss some of the lessons that he and his team learned in their quest to build a sophisticated caching system based on three layers: in-memory caching, columnar storage and relational caching.

Dremio's performance gains were significant, and Jacques will explain how Arrow was a core component of their strategy, enabling an architecture that wasn't previously possible.

For example, you'll hear how they use Apache Arrow for in-memory execution, which enables them to rewrite query plans to leverage data reflections so that queries can run in a fraction of the time typical distributed SQL engines need. Jacques will also talk about how Dremio translates query plans into different languages (Elasticsearch DSL + Painless, MongoDB Query Language, SQL dialects, etc.).
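As a rough illustration of what translating one plan into several target languages can look like, the sketch below renders a tiny filter tree as both a SQL predicate and a MongoDB filter document. This is not Dremio's implementation. The `Compare` and `And` node types and the `to_sql` and `to_mongo` functions are hypothetical names invented for this example; only the MongoDB operators (`$and`, `$eq`, `$gt`, `$lt`) are real.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Compare:
    """Hypothetical plan node: compare a column against an integer literal."""
    column: str
    op: str      # one of "=", ">", "<"
    value: int

@dataclass(frozen=True)
class And:
    """Hypothetical plan node: both child predicates must hold."""
    left: object
    right: object

# Mapping from the plan's comparison operators to MongoDB query operators.
_MONGO_OPS = {"=": "$eq", ">": "$gt", "<": "$lt"}

def to_sql(node):
    """Render the predicate tree as a SQL boolean expression."""
    if isinstance(node, And):
        return f"({to_sql(node.left)} AND {to_sql(node.right)})"
    return f"{node.column} {node.op} {node.value}"

def to_mongo(node):
    """Render the same tree as a MongoDB filter document."""
    if isinstance(node, And):
        return {"$and": [to_mongo(node.left), to_mongo(node.right)]}
    return {node.column: {_MONGO_OPS[node.op]: node.value}}

plan = And(Compare("age", ">", 30), Compare("visits", "<", 5))
sql_text = to_sql(plan)        # "(age > 30 AND visits < 5)"
mongo_filter = to_mongo(plan)  # {"$and": [{"age": {"$gt": 30}}, {"visits": {"$lt": 5}}]}
```

Keeping a single source-independent tree and one small renderer per backend is one way to let the same logical filter be pushed down to whichever system holds the data.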

And for another fascinating topic in Jacques' talk, you'll hear how Dremio's Arrow-based architecture enables "virtual datasets" that can join data across multiple systems, including Elasticsearch, MongoDB, Amazon S3, Hadoop and legacy RDBMSs.

Want to hear more talks like this? Join us at DataEngConf SF '18 and be part of the biggest technical conference around data science and engineering.
