Data Council Blog

Redshift versus Snowflake versus BigQuery / Part 1: Performance

Fivetran is a data pipeline that syncs data from apps, databases and file stores into our customers’ data warehouses. The question we get asked most often is “what data warehouse should I choose?” In order to better answer this question, we’ve performed a benchmark comparing the speed and cost of three of the most popular data warehouses — Amazon Redshift, Google BigQuery, and Snowflake.

Benchmarks are all about making choices: what kind of data will I use? How much? What kind of queries? How you make these choices matters a lot: change the shape of your data or the structure of your queries and the fastest warehouse can become the slowest. We’ve tried to make these choices in a way that represents a typical Fivetran user, so that the results will be useful the kind of company that uses Fivetran.

A typical Fivetran user might sync Salesforce, Zendesk, Marketo, Adwords and their production MySQL database into a data warehouse. These data sources aren’t that large: a terabyte would be an unusually large table. They are complex: they contain hundreds of tables in a normalized schema, and our customers write complex SQL queries to summarize this data. We’ve designed our benchmark to mimic this scenario.

The source code for this benchmark is available at https://github.com/fivetran/benchmark

How did we configure the warehouses?

We set up both Redshift and Snowflake in a $2 / hour configuration [1]. We used BigQuery in on-demand pay-per-query mode [2].

What data did we query?

We generated the the TPC-DS [3] dataset at 100GB scale. TPC-DS has 24 tables in a star schema; the tables represent web, catalog, and store sales of an imaginary retailer. The largest fact table had 400 million rows [4].

What queries did we run?

We ran 99 TPC-DS queries [5] in July of 2017. These queries are complex: they have lots of joins, aggregations, and subqueries. We ran each query only once, to prevent the warehouse from simply caching results and returning instantly.

How did we tune the warehouse?

Redshift, Snowflake, and BigQuery each offer advanced features like sort keys, clustering keys, and date-partitioning. We chose not to use any of these features in this benchmark [6]. We did apply column compression encodings in Redshift; Snowflake and BigQuery apply compression automatically.

Results

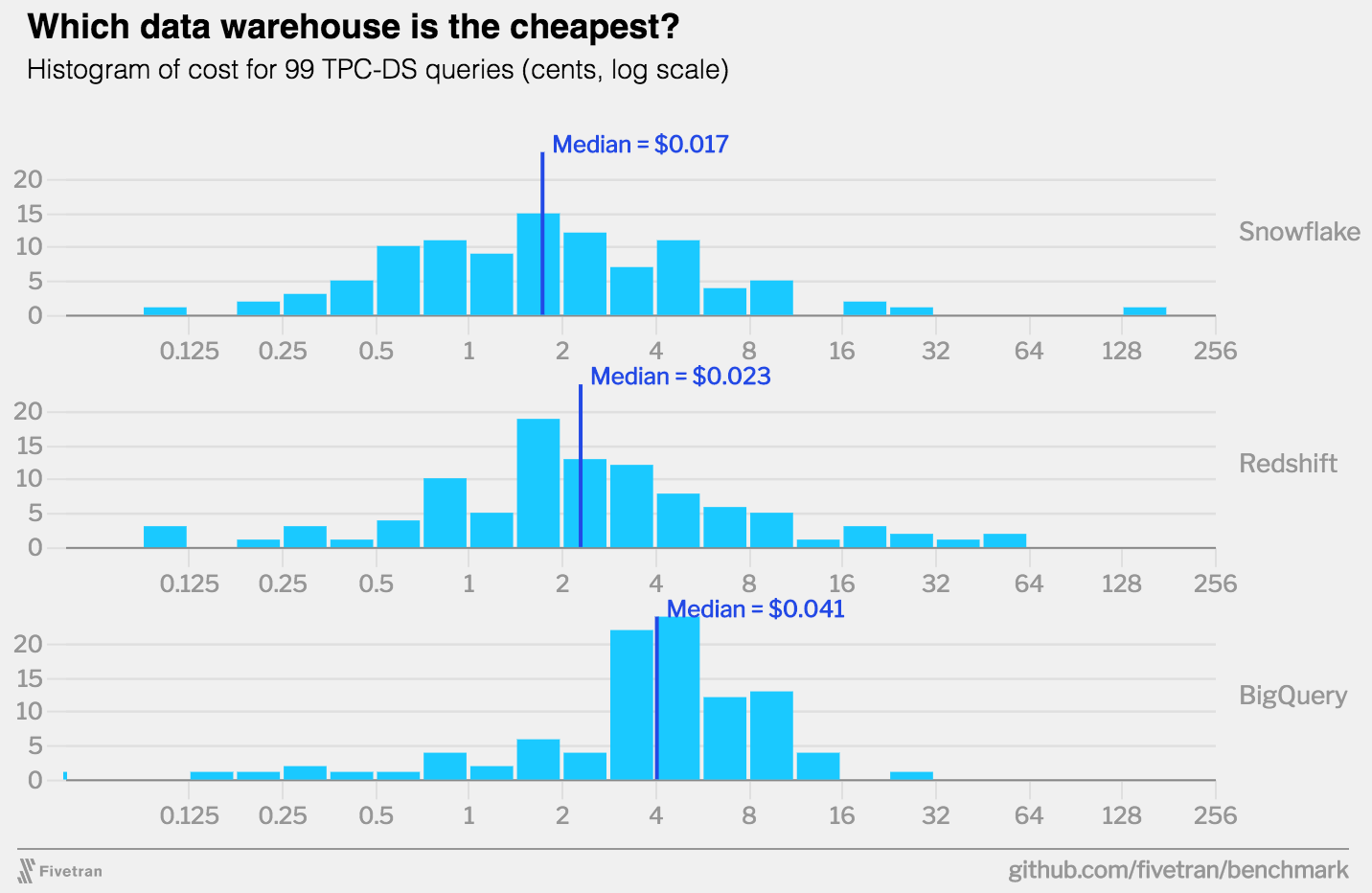

All warehouses had excellent execution speed, suitable for ad-hoc, interactive querying. The time differences are small; nobody should choose a warehouse on the basis of 7 seconds versus 5 seconds in one benchmark.

BigQuery charges per-query, so we are showing the actual costs billed by Google Cloud. To calculate cost-per-query for Snowflake and Redshift, we made an assumption about how much time a typical warehouse spends idle. For example, if you run a Snowflake XSmall warehouse for 1 hour at $2 / hour, and during that time you run 1 query that takes 30 minutes, that query cost you $2 and your warehouse was idle 50% of the time. On the other hand, if you run two 30-minute queries and the warehouse spends 0% of the time idle, each query only costs you $1. We looked at the actual usage data of a sample of Fivetran users with Redshift warehouses. The median Redshift cluster was idle 82% of the time [7].

According to this assumption [8], BigQuery is about twice as expensive as the other warehouses. However, this comparison depends heavily on our assumption about idleness. If you have a very “spiky” workload, BigQuery would be much cheaper than Redshift or Snowflake. If you have a very “steady” workload, BigQuery would be much more expensive.

Why are our results different than previous benchmarks?

Amazon’s Redshift vs BigQuery benchmark

In October 2016, Amazon ran a version of the TPC-DS queries on both BigQuery and Redshift. Amazon reported that Redshift was 6x faster and that BigQuery execution times were typically greater than 1 minute. The key differences between their benchmark and ours are:

- They used a 100x larger dataset (10TB versus 100 GB) and a 19x larger Redshift cluster ($38.40 / hour versus $2 / hour).

- They tuned the warehouse using sort and dist keys, whereas we did not.

- BigQuery Standard-SQL was still in beta in October 2016, it may have gotten faster by July 2017 when we ran this benchmark.

Benchmarks from vendors that claim their own product is the best should be taken with a grain of salt. There are many details not specified in Amazon’s blog post. For example, they used a huge Redshift cluster — -did they allocate all memory to a single user to make this benchmark complete super-fast, even though that’s not a realistic configuration? We don’t know. It would be great if AWS would publish the code necessary to reproduce their benchmark, so we could evaluate how realistic it is.

Periscope’s Redshift vs Snowflake vs BigQuery benchmark

Also in October 2016, Periscope Data compared Redshift, Snowflake and BigQuery using three variations of an hourly-aggregation query that joined a 1-billion row fact table to a small dimension table. They found that Redshift was about the same speed as BigQuery, but Snowflake was 2x slower. The key differences between their benchmark and ours are:

- Their dataset was somewhat larger

- Their queries were much simpler than our TPC-DS queries

The simpler queries are the big difference here. The problem with doing a benchmark with “easy” queries is that every warehouse is going to do pretty well on this test; it doesn’t really matter if Snowflake does an easy query fast and Redshift does an easy query really really fast. What matters is whether you can do the hard queries fast enough.

Periscope also compared costs, but they used a somewhat different approach to calculate cost-per-query. Like us, they looked at their customer’s actual usage data; but instead of using percentage-of-time idle, they looked at the number of queries-per-hour. They determined that most (but not all) Periscope customers would find Redshift cheaper, but it was not a huge difference. This is similar to our results.

Mark Litwintschik’s 1.1 billion taxi-rides benchmark

Mark Litwintshik benchmarked BigQuery in April 2016 and Redshift in June 2016. He ran 4 simple queries against a single table with 1.1 billion rows. He found that BigQuery was about the same speed as a Redshift cluster about 20x bigger than the one we tested ($41 / hour). Both warehouses completed his queries in 1–3 seconds, so this probably represents the “performance floor”: there is a minimum execution time for even the simplest queries.

Conclusion

These three warehouses all have excellent price and performance. We shouldn’t be surprised that they are similar: the basic techniques for making a fast columnar data warehouse have been well-known since the C-Store paper was published in 2005. These three data warehouses undoubtedly use the standard performance tricks: columnar storage, cost-based query planning, pipelined execution, and just-in-time compilation. We should be skeptical of any benchmark claiming that one of these warehouses is more than 2x faster than another.

For most users, the qualitative difference between these warehouses will matter more. We will address these in part 2!

Notes

[1] $2 / hour corresponds to 8 dc1.large nodes for Redshift and an XSmall virtual warehouse for Snowflake.

[2] BigQuery is a pure shared-resource query service, so there is no equivalent “configuration”; you simply send queries to BigQuery, and it sends you back results.

[3] TPC-DS is an industry-standard benchmarking meant for data warehouses. Even though we used TPC-DS data and queries, this benchmark is not an official TPC-DS benchmark, because we only used one scale, we modified the queries slightly, and we didn’t tune the data warehouses or generate alternative versions of the queries.

[4] This is a small scale by the standards of data warehouses, but most Fivetran users are interested data sources like Salesforce or MySQL, which have complex schemas but modest size.

[5] We had to modify the queries slightly to get them to run across all warehouses. The modifications we made were small, mostly changing type names. We used BigQuery standard-SQL, not legacy-SQL.

[6] If you know what kind of queries are going to run on your warehouse, you can use these features to tune your tables and make specific queries much faster. However, typical Fivetran users run all kinds of unpredictable queries on their warehouses, so there will always be a lot of queries that don’t benefit from tuning.

[7] Some readers may be surprised these Redshift clusters are idle most of the time. Fivetran users tend to use their warehouses for interactive queries, where a human is waiting for the result, so they need all queries quickly. To achieve this performance target, you need to provision a large warehouse relative to the size of your data, and that warehouse is going to be idle most of the time. Also, note that this doesn’t mean these warehouses are doing nothing for hours; it means that there are many small gaps of idleness interspersed between queries.

[8] The formula for calculating cost-per-query is [Query cost] = [Query execution time] * [Cluster cost] / (1 — [Cluster idle time])

Check out George's upcoming DataEngConf SF '18 talk where he dives deep into more detail on this topic. This post was originally published on Fivetran's blog.

Subscribe to Email Updates

Receive relevant content, news and event updates from our community directly into your inbox. Enter your email (we promise no spam!) and tell us below which region(s) you are interested in:

Fresh Posts

Categories

- Analytics (15)

- Apache Arrow (3)

- Artificial Intelligence (7)

- Audio Research (1)

- big data (7)

- BigQuery (2)

- Careers (2)

- Data Discovery (2)

- data engineer salary (1)

- Data Engineering (46)

- Data Infrastructure (2)

- Data Lakes (1)

- Data Pipelines (6)

- Data Science (33)

- Data Strategy (14)

- Data Visualization (6)

- Data Warehouse (10)

- Data Warehousing (2)

- Databases (4)

- datacoral (1)

- disaster management (1)

- Event Updates (12)

- functional programming (1)

- Learning (1)

- Machine Learning (18)

- memsql (1)

- nosql (1)

- Open Source (21)

- ops (1)

- postgresql (1)

- Redshift (1)

- sharding (1)

- Snowflake (1)

- Speaker Spotlight (5)

- SQL (2)

- Startups (12)

.png?width=347&height=97&name=Data%20Council%20AI%20logo%20(1).png)